What is ControlNet?

ControlNet is a technique used with Stable Diffusion, a latent diffusion model for text-to-image generation.

With a text prompt alone, it is hard to control what kind of image we get. ControlNet lets users steer the diffusion process more precisely by conditioning the model on an additional input image, such as a pose skeleton or a depth map, alongside the text prompt. This helps generate outputs that are more tailored and closer to the composition we have in mind.

There are five main ControlNet types. This post looks at two of them: OpenPose and Depth.

OpenPose

By incorporating OpenPose into ControlNet, the model can better understand and follow human body poses, so the generated image matches the pose in a reference image more accurately.

This integration gives ControlNet richer input data than a text prompt alone, resulting in more nuanced and tailored outputs.

For example, below are a reference image and the corresponding pose as interpreted by Stable Diffusion.

Here is the image that we want the model to follow:

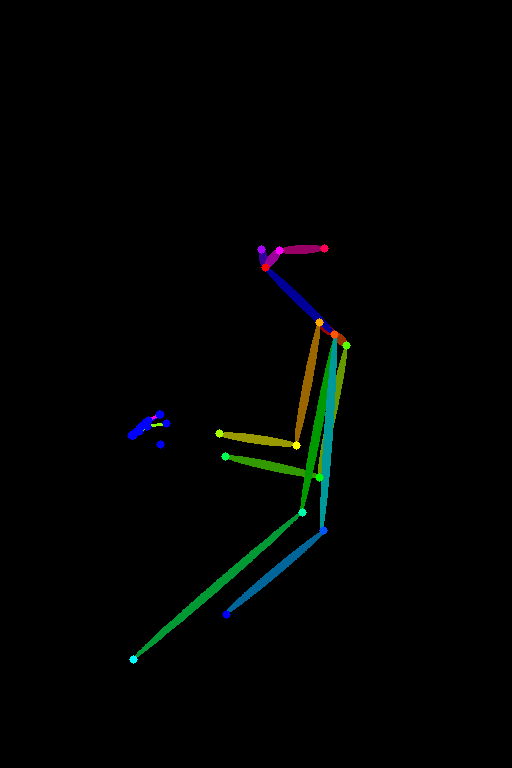

We can use the preview function to see how Stable Diffusion interprets this image:

It summarizes the pose in the image and shows us what it will preserve.

Prompt: masterpiece,best quality,1 girl,seaside,beach,full body,beautifully detailed background,cute anime face,masterpiece,color clothing,whole body,glitch lump on face,ultra-detailed,extremely delicate and beautiful,album cover,clear lines,squares,bright,limited palette,chahua,

Negative Prompt: NSFW,logo,text,blurry,low quality,bad anatomy,sketches,lowres,normal quality,monochrome,grayscale,worstquality,signature,watermark,cropped,username,out of focus,bad proportions,

Output:

👍 The final picture maintains the pose perfectly. This is especially helpful because it lets us use an image to express what we want, which is often hard to describe in words.
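The post does not say which tool produced these images. As a rough sketch only, assuming the Hugging Face `diffusers` library, a CUDA GPU, and the two model IDs shown below (all of these are assumptions, not taken from this post), the same workflow of pose image plus prompt plus negative prompt could look like this. `build_call_kwargs` and `generate_with_pose` are hypothetical helper names.

```python
def build_call_kwargs(prompt, negative_prompt, pose_image, steps=20, control_scale=1.0):
    """Collect the arguments passed to the ControlNet pipeline call."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "image": pose_image,  # the OpenPose skeleton image, not the original photo
        "num_inference_steps": steps,
        "controlnet_conditioning_scale": control_scale,  # how strongly the pose is enforced
    }


def generate_with_pose(
    pose_image,
    prompt,
    negative_prompt,
    base_model="stable-diffusion-v1-5/stable-diffusion-v1-5",  # assumed checkpoint
    controlnet_model="lllyasviel/sd-controlnet-openpose",      # assumed ControlNet weights
):
    """Generate one image that follows the given pose skeleton."""
    # Heavy imports stay local so the helper above can be used without a GPU stack.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    controlnet = ControlNetModel.from_pretrained(controlnet_model, torch_dtype=torch.float16)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_model, controlnet=controlnet, torch_dtype=torch.float16
    ).to("cuda")

    result = pipe(**build_call_kwargs(prompt, negative_prompt, pose_image))
    return result.images[0]
```

Lowering `control_scale` below 1.0 would loosen how strictly the pose is followed, which can help when the prompt and the pose conflict.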

Depth

By integrating depth data, ControlNet gains a better understanding of the three-dimensional structure of the scene, allowing for more accurate and contextually relevant image generation.

This integration enables ControlNet to produce outputs that are not only visually accurate but also spatially coherent, resulting in more realistic and immersive generated content.

Here is the image that we ask it to follow:

With Depth, we use this prompt: female character,close-up,peaceful expression,flowing black hair,wind effect,home,piano,minimalistic floral pattern,subtle texture,soft shading,gentle brush strokes,serene,simple attire,tranquil mood,ethereal aesthetic,whimsical,light and shadow play,

Negative Prompts: extra legs,bad feet,cross-eyed,mutated hands and fingers,extra limb,extra arms,mutated hands,poorly drawn hands,malformed hands,too many fingers,fused fingers,missing fingers,acnes,missing limb,bad hands,malformed limbs,floating limbs,skin spots,disconnected limbs,skin blemishes,poorly drawn face,disfigured,ugly,(fat:1.2),Multiple people,(fat:1.2),bad body,mutated,long body,mutation,deformed,long neck,(fat:1.2),

This is how Depth understands the image. It shows the different layers of the image, especially the position of her hands.
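To make the idea of a depth map concrete, here is a toy sketch in plain Python. It assumes the common preview convention where brighter pixels are closer to the camera. The distances and the `depth_to_gray` helper are made up for illustration and are not what the actual depth preprocessor computes.

```python
def depth_to_gray(distances):
    """Map per-pixel distances to 0-255 gray levels, nearest = brightest."""
    flat = [d for row in distances for d in row]
    near, far = min(flat), max(flat)
    span = far - near
    if span == 0:
        # A flat scene has no depth variation; render it uniformly bright.
        return [[255 for _ in row] for row in distances]
    return [[round(255 * (far - d) / span) for d in row] for row in distances]


# Hypothetical distances in meters for a tiny 2x3 "image".
scene = [
    [5.0, 5.0, 5.0],  # background wall
    [2.0, 1.0, 5.0],  # farther hand, nearer hand, background
]
gray = depth_to_gray(scene)
```

The two hands end up with different gray levels, which is the kind of layering information the model can use to keep one hand in front of the other.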

This is the output:

So great! The difference is clear when we compare it to OpenPose, which cannot distinguish when one hand is in front of the other.

Here is what OpenPose gives us. It definitely cannot capture the pose we want.
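One way to see why OpenPose struggles here: a pose skeleton stores only 2D positions for each joint. The sketch below uses made-up coordinates and a hypothetical `to_pose_keypoints` helper to show that two poses differing only in which hand is in front collapse to the same skeleton.

```python
def to_pose_keypoints(joints_3d):
    """Keep only (x, y) for each joint, as a 2D pose skeleton does."""
    return {name: (x, y) for name, (x, y, _z) in joints_3d.items()}


# Hypothetical joints as (x, y, distance from camera).
left_hand_in_front = {
    "left_wrist": (0.48, 0.60, 1.0),
    "right_wrist": (0.52, 0.60, 1.2),
}
right_hand_in_front = {
    "left_wrist": (0.48, 0.60, 1.2),
    "right_wrist": (0.52, 0.60, 1.0),
}

# Both poses yield identical 2D keypoints, so the ordering of the hands is lost.
same_skeleton = to_pose_keypoints(left_hand_in_front) == to_pose_keypoints(right_hand_in_front)
```

A depth map keeps exactly the information that is dropped here, which fits the better result Depth gives for this image.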

Conclusion

In conclusion, the integration of ControlNet into Stable Diffusion enhances the model’s capabilities by providing more precise control over the generation process.

By incorporating techniques such as OpenPose and depth information, ControlNet enables the model to produce more contextually relevant and realistic outputs.

ControlNet represents a significant advancement in the field of generative models, and we will explore more in the next post!